A different architectural principle

Omega Server’s neural network operates on principles close to those of natural neurons and draws on current scientific knowledge in biology.

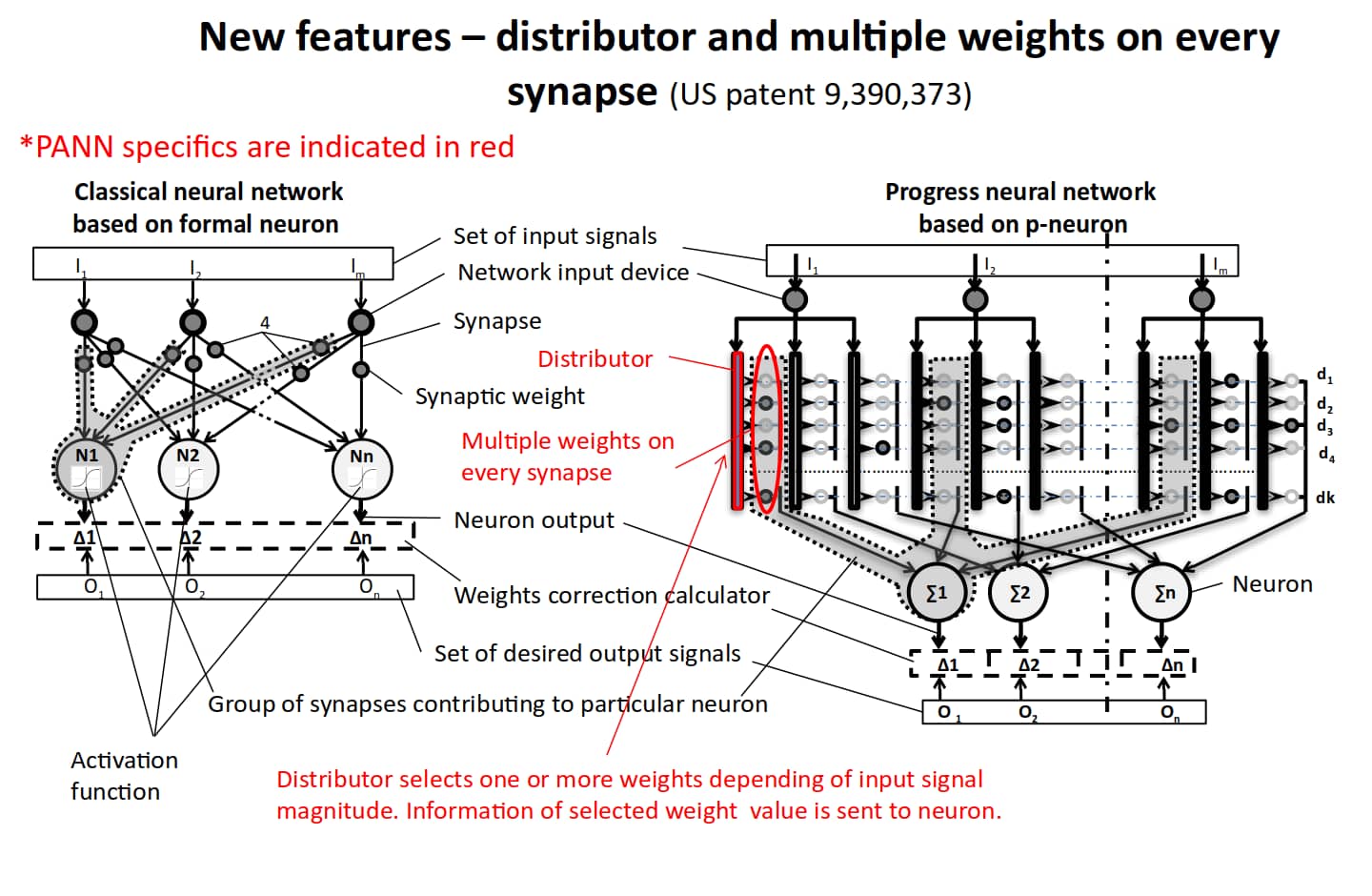

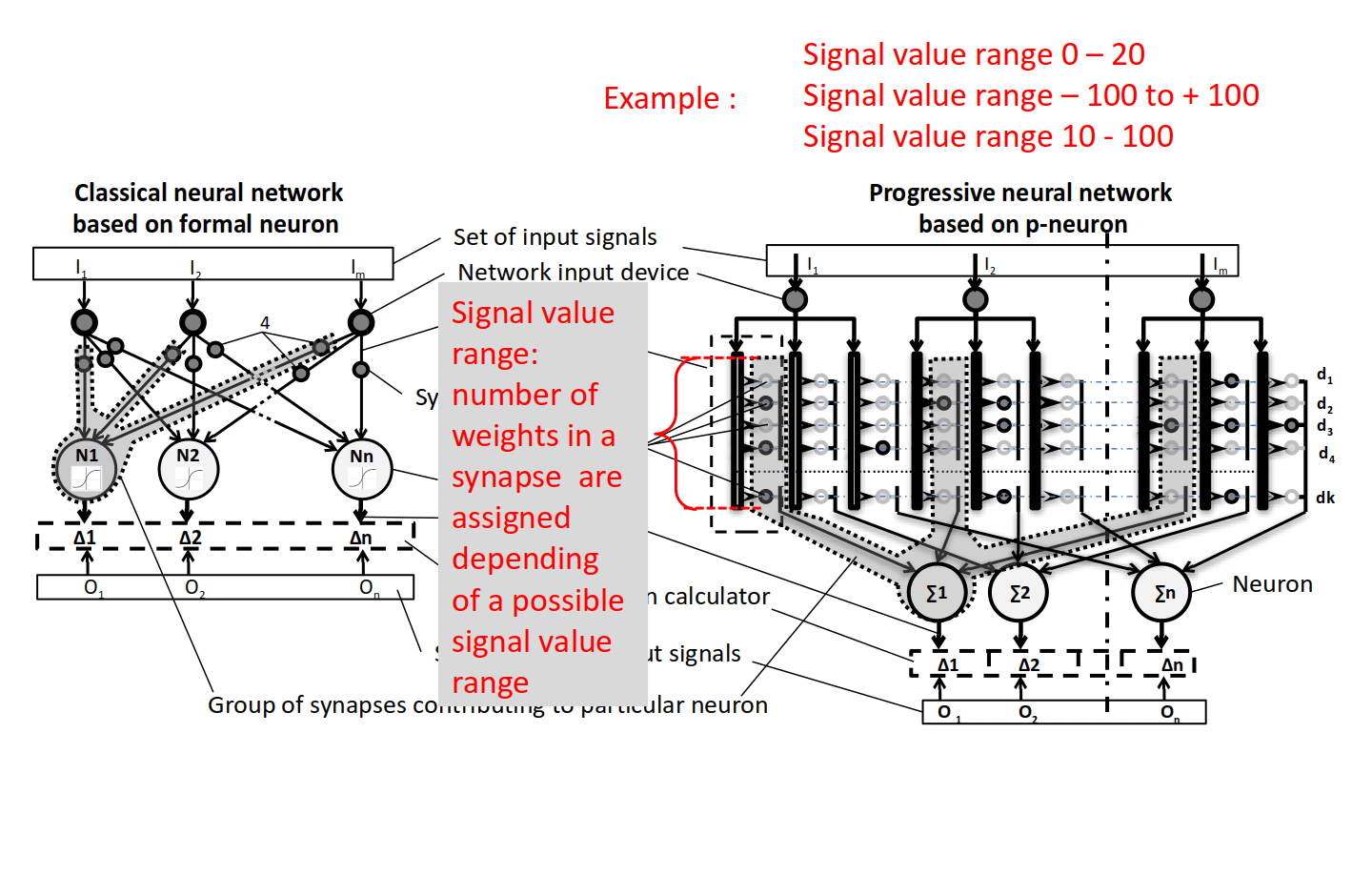

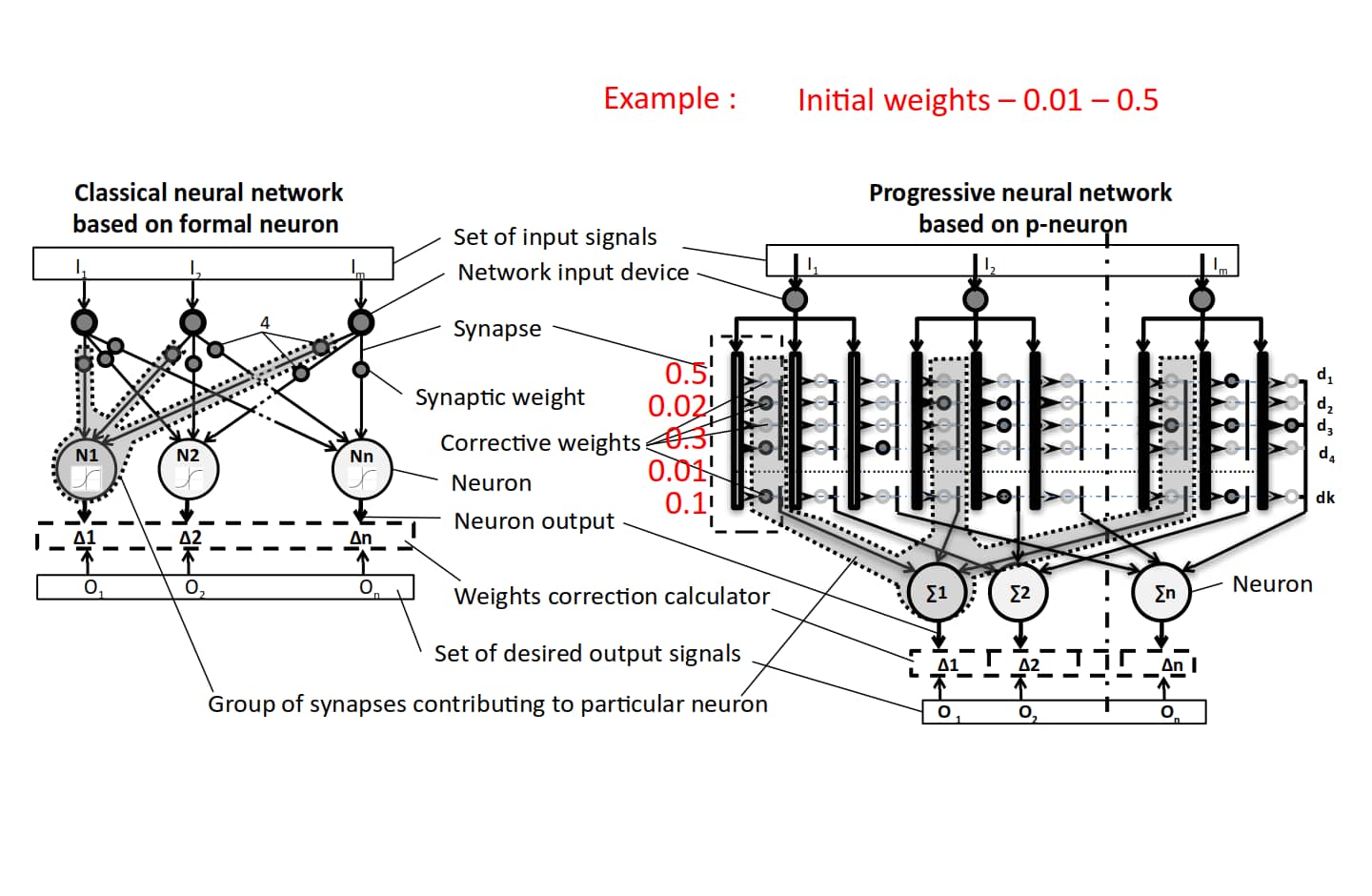

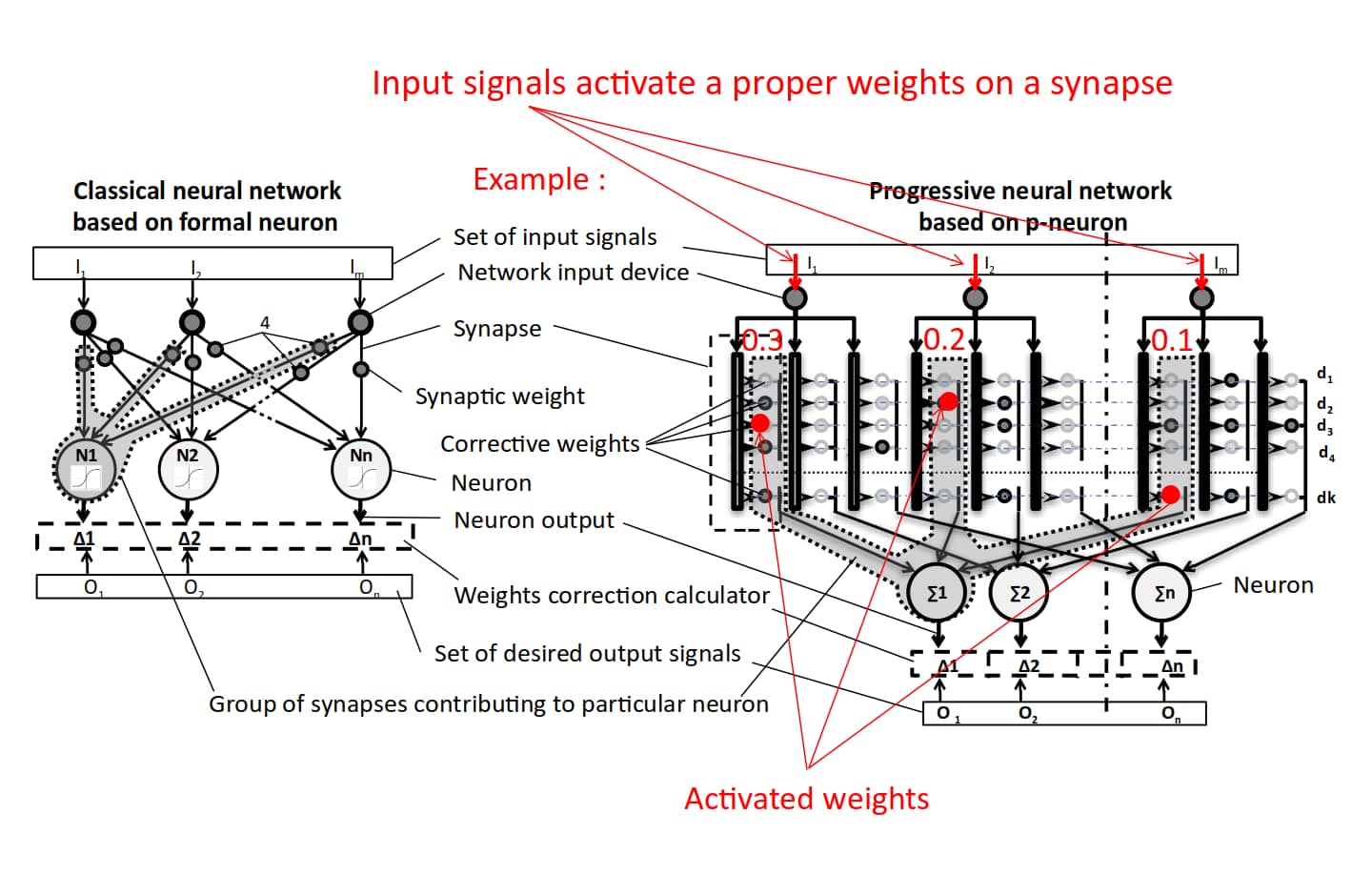

Much like a chemical synapse in the human brain, the Omega Neuron uses many transmitters per synapse, multiple weights, and internal feedback.

PANN™ and its key differences from classical ANNs

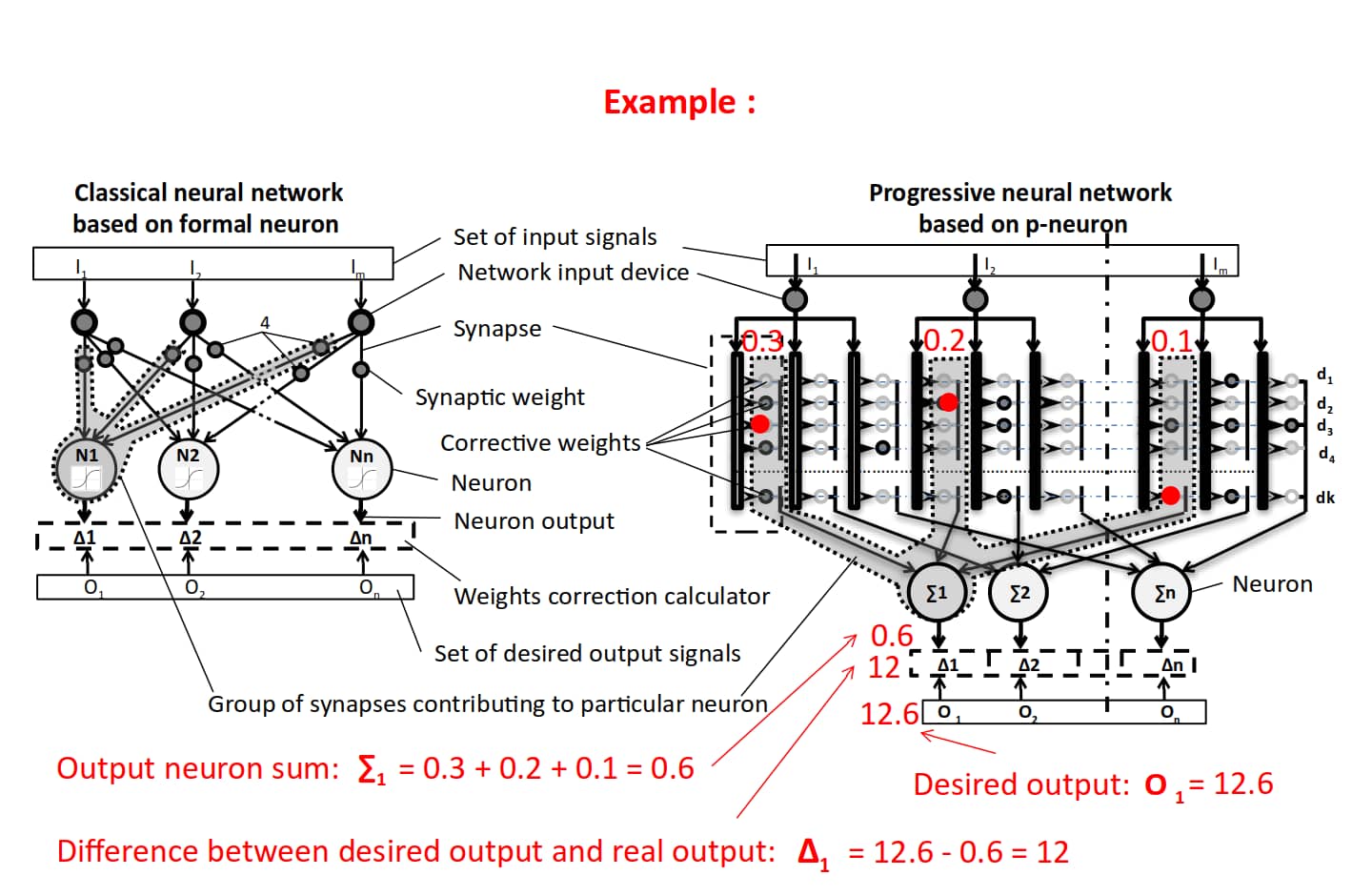

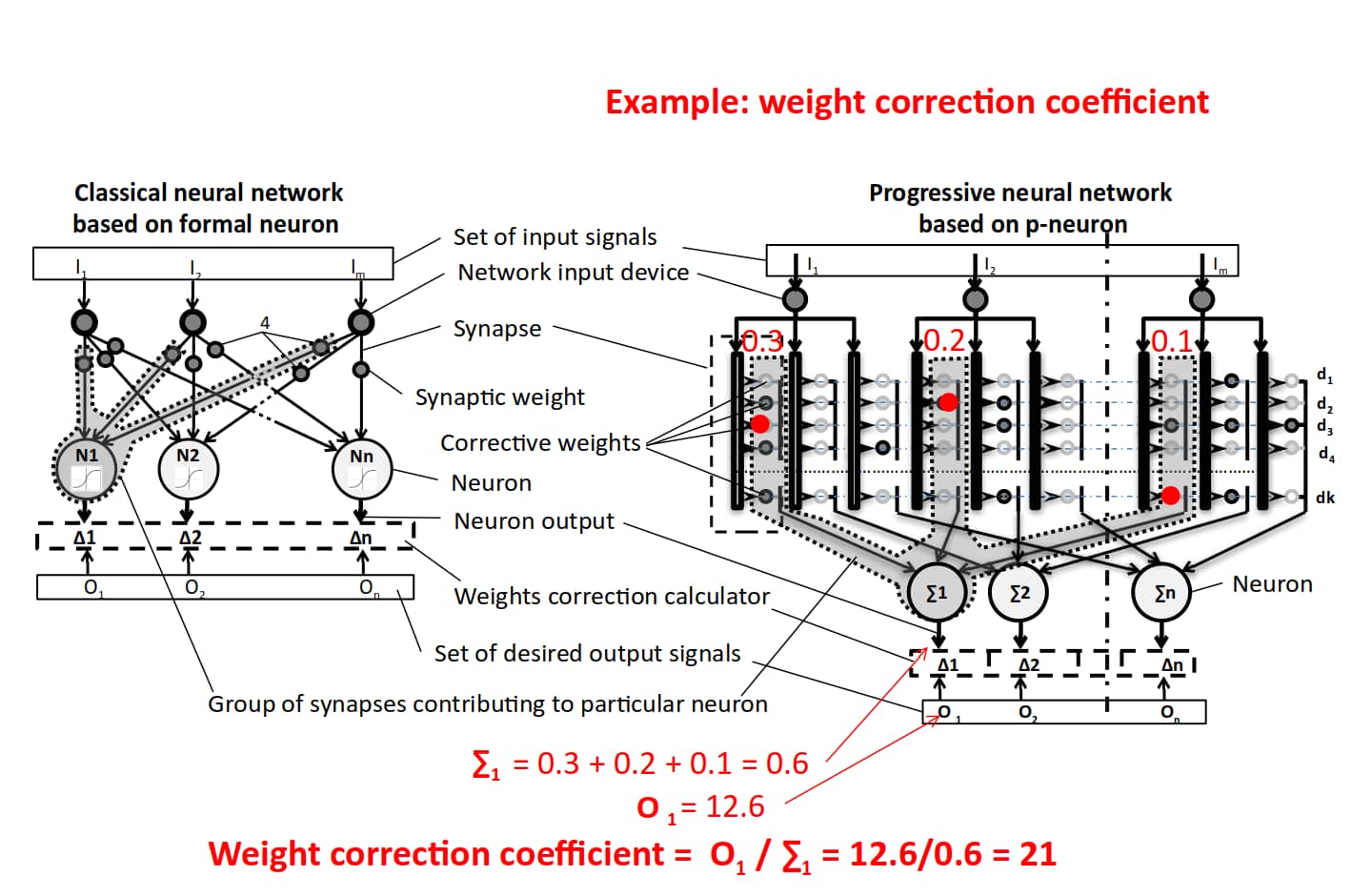

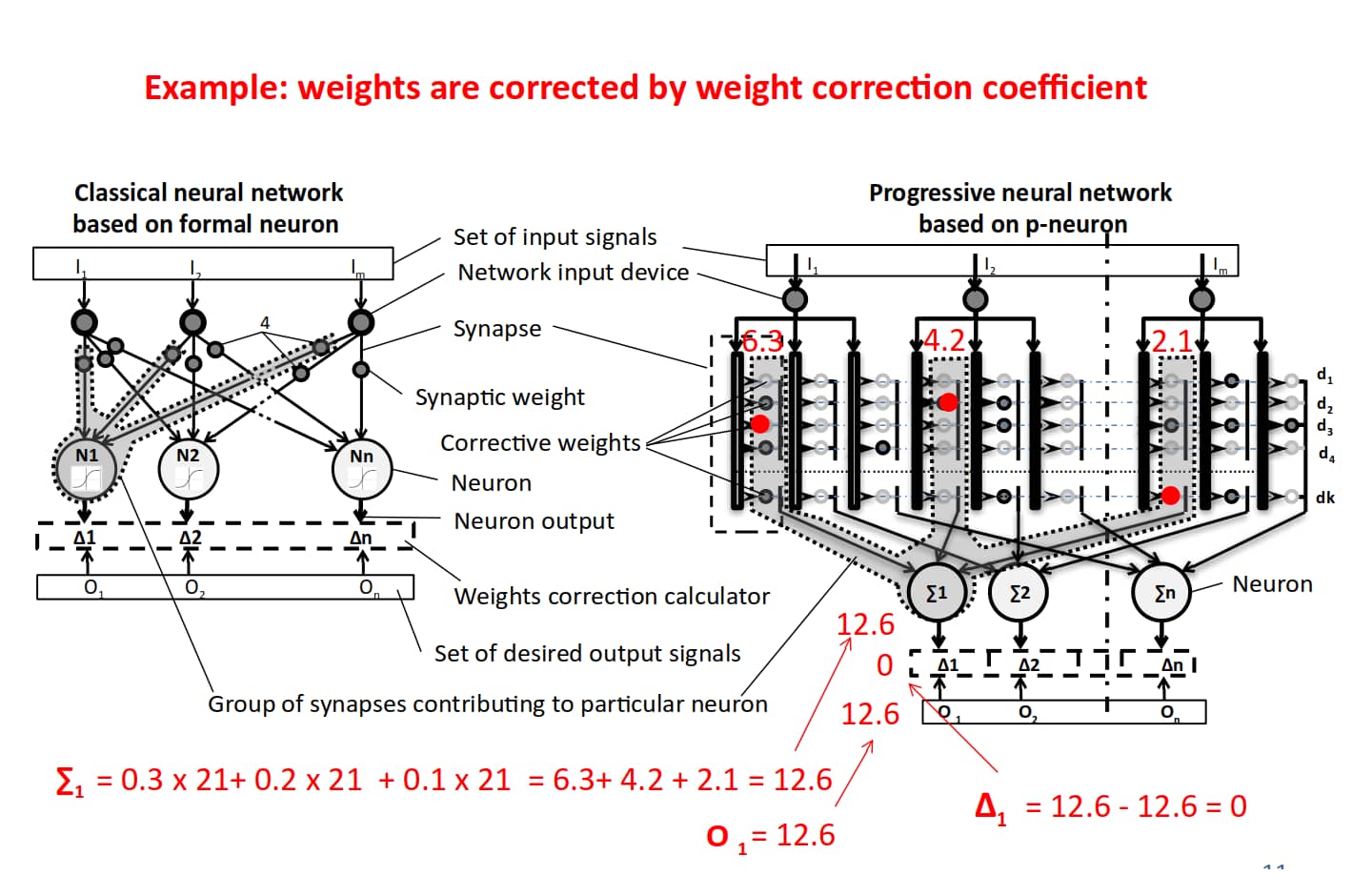

PANN™ training algorithm

- The Omega Neuron weights are not corrected gradually, one image after another, as in gradient descent. Instead, training compensates the error in a single-step operation during the retrograde signal, using only the information the neuron received from its synapses during training.

- Training the PANN™ on the next image does not depend on its training on the previous image. The training error is fully compensated for each image used in training.

- Complex calculations are not necessary.

- Repeated, iterative calculations are not necessary.

- In its basic variant, PANN™ corrects all weights that contribute to the error on a particular neuron, applying the same correction value to every active weight.

- In its basic variant, PANN™ has no activation function (or has a linear activation function), which drastically simplifies and reduces calculations.

- Calculations and weight correction may be performed using matrix algebra.

Taken together, these properties enable a thousand-fold increase in network training speed.
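The single-step idea in the list above can be illustrated with a small sketch. This is not the PANN™ algorithm itself, whose details are not given here, but a minimal example under stated assumptions: one weight per input, a linear output (no activation function), and "active" weights taken to mean those whose input is non-zero. The function name `single_step_correction` and the toy data are hypothetical. The error on each output neuron is divided equally among its active weights, so the output for that image matches the target after one update.

```python
import numpy as np

def single_step_correction(weights, x, target):
    """Return a copy of `weights` whose linear output for `x` equals `target`.

    Assumptions (not from the source): one weight per input, output = W @ x,
    and a weight is "active" when its input is non-zero.
    """
    x = np.asarray(x, dtype=float)
    target = np.asarray(target, dtype=float)

    active = x != 0                      # mask of active inputs
    total = x[active].sum()              # summed signal over active inputs
    if total == 0:
        raise ValueError("active inputs must have a non-zero sum")

    error = target - weights @ x         # error per output neuron
    delta = error / total                # same correction for every active weight

    corrected = weights.copy()
    corrected[:, active] += delta[:, None]   # one matrix update, no iteration
    return corrected

# Toy data: 3 output neurons, 8 binary inputs (hypothetical "image").
rng = np.random.default_rng(0)
weights = rng.normal(size=(3, 8))
image = np.array([1, 0, 1, 1, 0, 0, 1, 0], dtype=float)
target = np.array([1.0, 0.0, 0.0])

new_weights = single_step_correction(weights, image, target)
print(np.allclose(new_weights @ image, target))
```

With a single weight per input, as in this sketch, correcting for a second image would disturb the result for the first. The sketch shows only the one-step compensation for a single image, not the independence between images described above.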